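This post covers the three parts of the assignment: Part 1, reading the skeleton structure from a BVH file; Part 2, forward kinematics; and Part 3, motion retargeting.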

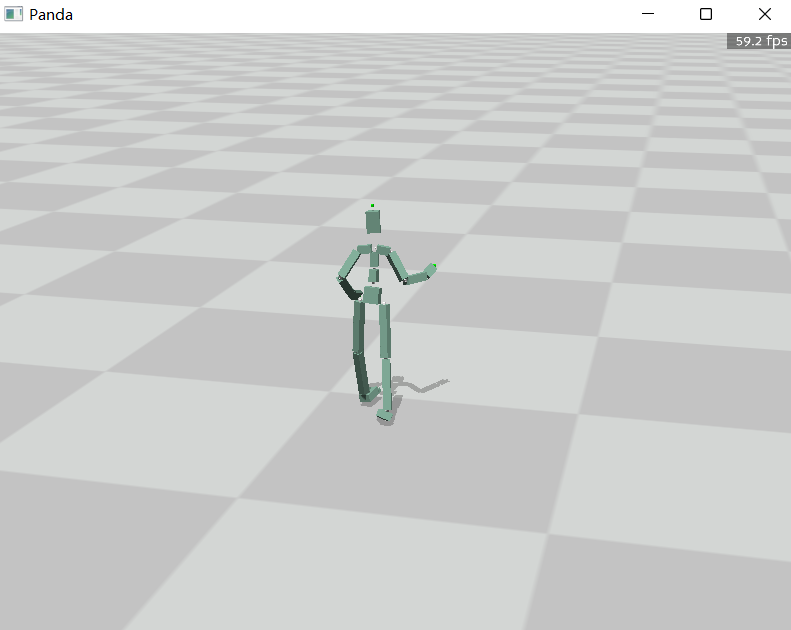

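Part 1: Reading the skeleton structure from a BVH file

The assignment already links to an introduction to the BVH file format, so all we need to do is read the file line by line and handle each keyword. For me, the most tedious part was actually writing the program in Python: I am not very familiar with the language, so a large share of the time went into getting used to it.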

    import numpy as np


    def part1_calculate_T_pose(bvh_file_path):
        """Fill in the following.
        Input: path to a bvh file
        Output:
            joint_name: List[str], the names of all joints
            joint_parent: List[int], the index of each joint's parent; the root's parent index is -1
            joint_offset: np.ndarray of shape (M, 3), the offsets of all joints
        Tips:
            joint_name should follow the same order as the bvh file
        """
        joint_name = []
        joint_parent = []
        joint_channel_count = []
        joint_channel = []
        joint_offset = []
        joint_stack = []  # names of the joints whose "{" has not been closed yet

        # read file
        with open(bvh_file_path, 'r') as f:
            lines = f.readlines()

        # parse file
        for raw_line in lines:
            line = raw_line.split()
            if not line:  # skip blank lines instead of failing on line[0]
                continue
            if line[0] == "HIERARCHY":
                continue
            elif line[0] == "MOTION":
                break
            elif line[0] == "CHANNELS":
                joint_channel_count.append(int(line[1]))
                joint_channel.append(line[2:])
            elif line[0] == "ROOT":
                joint_name.append(line[-1])
                joint_parent.append(-1)
            elif line[0] == "JOINT":
                joint_name.append(line[-1])
                joint_parent.append(joint_name.index(joint_stack[-1]))
            elif line[0] == "End":
                # an End Site has no name of its own, so derive one from its parent
                joint_name.append(joint_stack[-1] + "_end")
                joint_parent.append(joint_name.index(joint_stack[-1]))
            elif line[0] == "OFFSET":
                joint_offset.append([float(line[1]), float(line[2]), float(line[3])])
            elif line[0] == "{":
                joint_stack.append(joint_name[-1])
            elif line[0] == "}":
                joint_stack.pop()

        joint_offset = np.array(joint_offset).reshape(-1, 3)
        return joint_name, joint_parent, joint_offset

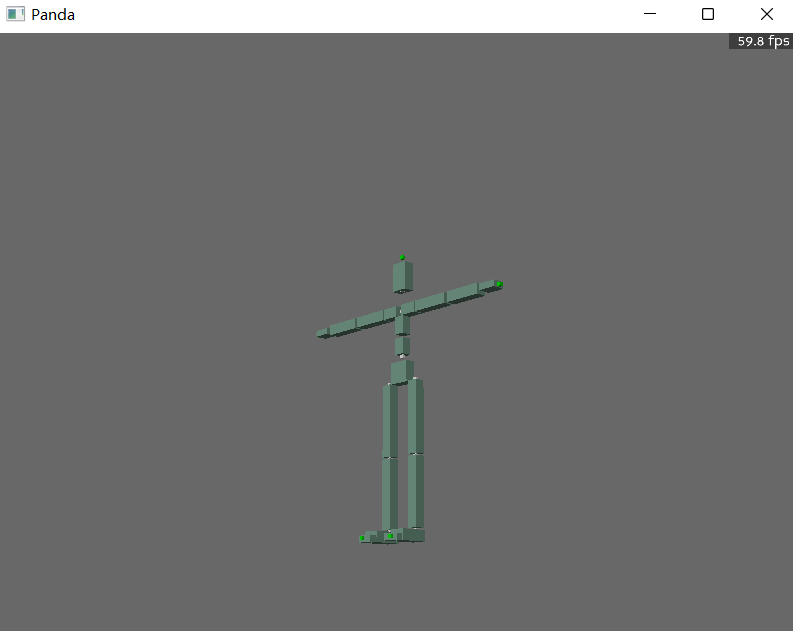
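To make the stack-based parsing more concrete, here is a minimal, self-contained sketch that applies the same idea to a tiny hand-written hierarchy held in a string. The helper name `parse_hierarchy` and the three-joint skeleton `TINY_BVH` are made up for illustration and are not part of the assignment; the sketch also keeps joint indices on the stack rather than names, which is a small variation on the code above.

```python
import numpy as np

# A tiny hand-written skeleton (hypothetical, not taken from the assignment data).
TINY_BVH = """HIERARCHY
ROOT Hips
{
    OFFSET 0.0 1.0 0.0
    CHANNELS 6 Xposition Yposition Zposition Xrotation Yrotation Zrotation
    JOINT Spine
    {
        OFFSET 0.0 0.5 0.0
        CHANNELS 3 Xrotation Yrotation Zrotation
        End Site
        {
            OFFSET 0.0 0.25 0.0
        }
    }
}
MOTION
Frames: 0
Frame Time: 0.0166667
"""


def parse_hierarchy(text):
    """Parse the HIERARCHY part of a BVH string using an explicit stack."""
    names, parents, offsets = [], [], []
    stack = []  # indices of the joints whose braces are currently open
    for raw in text.splitlines():
        tokens = raw.split()
        if not tokens:
            continue
        key = tokens[0]
        if key == "MOTION":
            break
        if key in ("ROOT", "JOINT"):
            names.append(tokens[1])
            parents.append(stack[-1] if stack else -1)
        elif key == "End":
            # An End Site has no name of its own; derive one from its parent.
            names.append(names[stack[-1]] + "_end")
            parents.append(stack[-1])
        elif key == "OFFSET":
            offsets.append([float(v) for v in tokens[1:4]])
        elif key == "{":
            stack.append(len(names) - 1)
        elif key == "}":
            stack.pop()
    return names, parents, np.array(offsets).reshape(-1, 3)


names, parents, offsets = parse_hierarchy(TINY_BVH)
print(names)
print(parents)
print(offsets.shape)
```

Keeping indices on the stack avoids the `list.index` lookup and would still work if two joints happened to share a name.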

Part 2: Forward kinematics

I have studied robotics before, so this part was straightforward; the key is simply not to mix up the indices.

    import numpy as np
    from scipy.spatial.transform import Rotation as R


    def part2_forward_kinematics(joint_name, joint_parent, joint_offset, motion_data, frame_id):
        """Fill in the following.
        Input: the joint names, parent list and offset list obtained in part1
            motion_data: np.ndarray of shape (N, X), where N is the number of frames and X the number of channels
            frame_id: int, index of the frame to return
        Output:
            joint_positions: np.ndarray of shape (M, 3), the global positions of all joints
            joint_orientations: np.ndarray of shape (M, 4), the global rotations of all joints (quaternions)
        Tips:
            1. the quaternion order of joint_orientations is (x, y, z, w)
            2. use uppercase XYZ with from_euler
        """
        joint_positions = []
        joint_orientations = []

        # row 0 is the root position; the remaining rows are the local Euler angles
        frame_data = motion_data[frame_id].reshape(-1, 3)

        # local rotation of every joint that has channels, as quaternions
        quaternion = R.from_euler('XYZ', frame_data[1:], degrees=True).as_quat()

        # end joints have no motion data, so insert an identity rotation for each
        end_index = [i for i, name in enumerate(joint_name) if "_end" in name]
        for i in end_index:
            quaternion = np.insert(quaternion, i, [0, 0, 0, 1], axis=0)

        for index, parent in enumerate(joint_parent):
            if parent == -1:
                joint_positions.append(frame_data[0])
                joint_orientations.append(quaternion[index])
            else:
                # global rotation and position:
                #   Q_i = Q_parent * R_i
                #   p_i = p_parent + Q_parent * l_i
                # Q_parent is the parent's global orientation, which is already
                # available because parents come before their children in a bvh.
                rot_local = R.from_quat(quaternion[index])
                rot_parent = R.from_quat(joint_orientations[parent])
                joint_orientations.append((rot_parent * rot_local).as_quat())
                offset_rotation = rot_parent.apply(joint_offset[index])
                joint_positions.append(joint_positions[parent] + offset_rotation)

        joint_positions = np.array(joint_positions)
        joint_orientations = np.array(joint_orientations)
        return joint_positions, joint_orientations

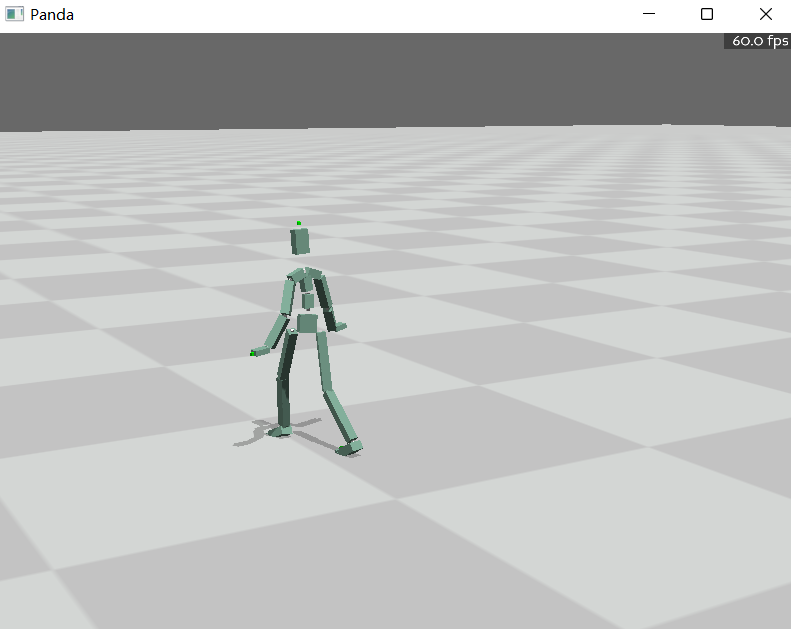
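As a quick sanity check of the recursion Q_i = Q_parent R_i and p_i = p_parent + Q_parent l_i, the following sketch runs it on a planar chain that is small enough to verify by hand. The three-joint skeleton and its angles are invented for illustration; only `scipy.spatial.transform.Rotation` and NumPy are used.

```python
import numpy as np
from scipy.spatial.transform import Rotation as R

# Hypothetical 3-joint chain: root -> elbow -> tip, each bone 1 unit along +X.
joint_parent = [-1, 0, 1]
joint_offset = np.array([[0.0, 0.0, 0.0],
                         [1.0, 0.0, 0.0],
                         [1.0, 0.0, 0.0]])

# Local rotations: root and elbow each turn 90 degrees about Z; the tip is identity.
local_rot = R.from_euler('XYZ', [[0, 0, 90], [0, 0, 90], [0, 0, 0]], degrees=True)

positions = np.zeros((3, 3))
orientations = [None] * 3
for i, p in enumerate(joint_parent):
    if p == -1:
        # The root's global orientation is its local rotation.
        orientations[i] = local_rot[i]
    else:
        # Compose with the parent's *global* orientation, parent on the left.
        orientations[i] = orientations[p] * local_rot[i]
        # The offset is expressed in the parent's frame.
        positions[i] = positions[p] + orientations[p].apply(joint_offset[i])

print(np.round(positions, 6))
```

Working it out by hand: the root's 90 degree turn sends the first bone to +Y, so the elbow sits at (0, 1, 0); the elbow's global orientation is then 180 degrees about Z, so the second bone points along -X and the tip lands at about (-1, 1, 0). Swapping the multiplication order, or using the parent's local rotation instead of its global one, happens to give the same answer for this chain, because rotations about a single axis commute and the elbow's parent is the root. Using joints that rotate about different axes, or a longer chain, would expose such a mistake.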

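Part 3: Motion retargeting

This part mainly converts the motion from the A-pose to the T-pose. Note that although the number and names of the joints are unchanged, their order differs, so the BVH motion data has to be reordered before it is returned. Also note that the root joint has 6 channels, the first three of which are position data, so this part needs separate handling during the reordering. The retargeting code in this post only works for the assignment and is not general-purpose.

The external dataset linked from the course assignment requires the git lfs tool, and GitHub told me that my free bandwidth was insufficient and that I would have to pay, so I have put that part of the work aside for now.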

    def part3_retarget_func(T_pose_bvh_path, A_pose_bvh_path):
        """
        Retarget the A-pose bvh onto the T-pose skeleton.
        Input: the paths of the two bvh files
        Output:
            motion_data: np.ndarray of shape (N, X), where N is the number of frames and X the number of channels; the retargeted motion data
        Tips:
            the joint name order of the two bvh files may differ
            as_euler also needs uppercase XYZ
        """
        motion_dict = {}

        joint_name_T, joint_parent_T, joint_offset_T = part1_calculate_T_pose(T_pose_bvh_path)
        joint_name_A, joint_parent_A, joint_offset_A = part1_calculate_T_pose(A_pose_bvh_path)

        # load_motion_data is not defined in this post; it is presumably the
        # helper that comes with the assignment code
        motion_data_A = load_motion_data(A_pose_bvh_path)

        # the root has 6 channels: split off its 3 position channels first
        root_position = motion_data_A[:, :3]
        motion_data_A = motion_data_A[:, 3:]
        motion_data = np.zeros(motion_data_A.shape)

        # end joints have no channels, so drop them from both name lists
        joint_remove_A = [name for name in joint_name_A if "_end" not in name]
        joint_remove_T = [name for name in joint_name_T if "_end" not in name]

        # index the A-pose rotation channels by joint name
        for index, name in enumerate(joint_remove_A):
            motion_dict[name] = motion_data_A[:, 3*index:3*(index+1)]

        # write the channels back in the T-pose joint order
        for index, name in enumerate(joint_remove_T):
            # simple conversion from A pose to T pose (assignment-specific)
            if name == "lShoulder":
                motion_dict[name][:, 2] -= 45
            elif name == "rShoulder":
                motion_dict[name][:, 2] += 45
            motion_data[:, 3*index:3*(index+1)] = motion_dict[name]

        motion_data = np.concatenate([root_position, motion_data], axis=1)
        return motion_data
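
The channel reordering on its own can also be written with NumPy indexing instead of a dictionary. The sketch below is only an illustration under made-up assumptions: the joint lists `names_A` and `names_T` and the fake `motion_A` array are hypothetical, and the shoulder correction is deliberately left out so that only the reordering is shown.

```python
import numpy as np

# Hypothetical joint orders (end sites already removed) for the two files.
names_A = ["Hips", "lShoulder", "rShoulder"]
names_T = ["Hips", "rShoulder", "lShoulder"]

# Two frames of fake data: 3 root-position channels + 3 rotation channels per joint.
motion_A = np.arange(2 * (3 + 3 * len(names_A)), dtype=float).reshape(2, -1)

# The root position is not a rotation, so it is kept aside.
root_pos = motion_A[:, :3]

# View the rotations as (frames, joints, 3) so that a joint is one index, not a slice.
rot_A = motion_A[:, 3:].reshape(len(motion_A), len(names_A), 3)

# For every joint in the target order, look up its position in the source order.
order = [names_A.index(name) for name in names_T]
rot_T = rot_A[:, order, :]

# Flatten back to (frames, channels) and put the root position in front again.
motion_T = np.concatenate([root_pos, rot_T.reshape(len(motion_A), -1)], axis=1)
print(motion_T[0])
```

Reshaping to `(frames, joints, 3)` means the reordering is a single fancy-indexing step, and the `3*index:3*(index+1)` slice arithmetic disappears.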